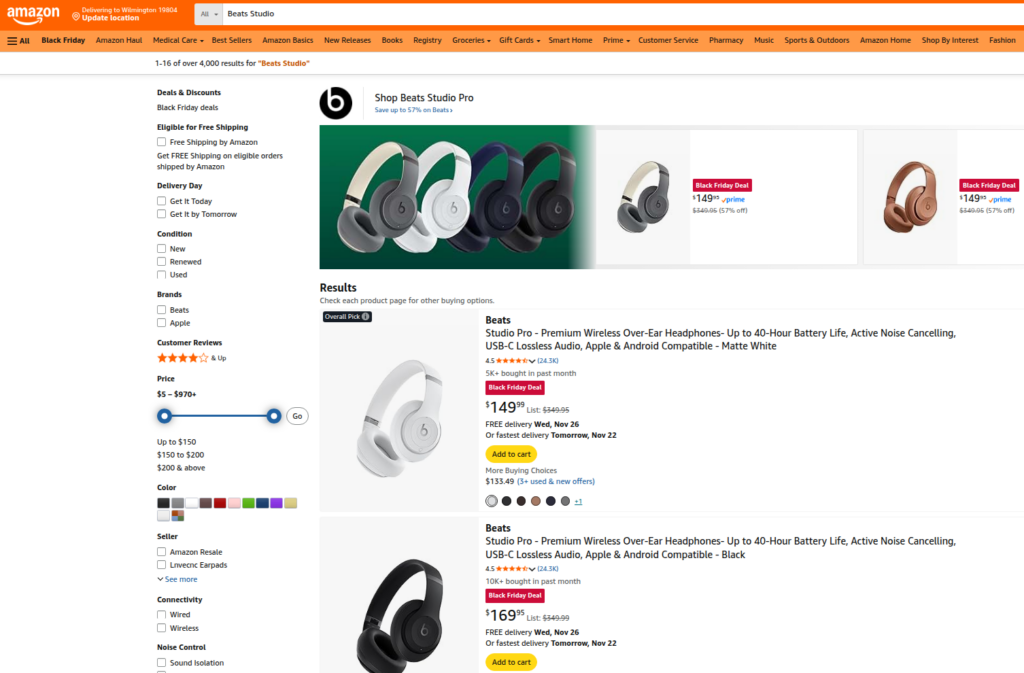

Web crawler APIs have become increasingly prevalent in the e-commerce sector, serving as a core tool for businesses to scrape e-commerce data, implement price monitoring, conduct review analysis, and track inventory levels. In particular, an ecommerce crawler API helps teams scale reliable collection when websites enforce strict anti-bot rules.

In the fiercely competitive e-commerce landscape, accurate, real-time data is the cornerstone of strategic decision-making. Professional web crawler APIs are built to get past anti-bot barriers at scale, so they often outperform manual collection or basic scripts, and they return structured e-commerce data that feeds directly into decisions. This article explains how an ecommerce crawler API supports e-commerce scenarios, including core data types, anti-scraping challenges, parameter configuration, structured extraction, storage, and compliance.

Core E-Commerce Data Types with a Web Crawler API

E-commerce websites host vast volumes of data, yet not all data points hold equal value. As a result, enterprises should prioritize high-value e-commerce data and extract insights that directly affect revenue, satisfaction, and competitive advantage.

- Pricing Data (Core Target of Crawler APIs): Pricing (base, discount, promotion) supports competitive pricing strategies. For example, retailers can monitor competitor prices for dynamic pricing, while brands can detect unauthorized resellers or price violations.

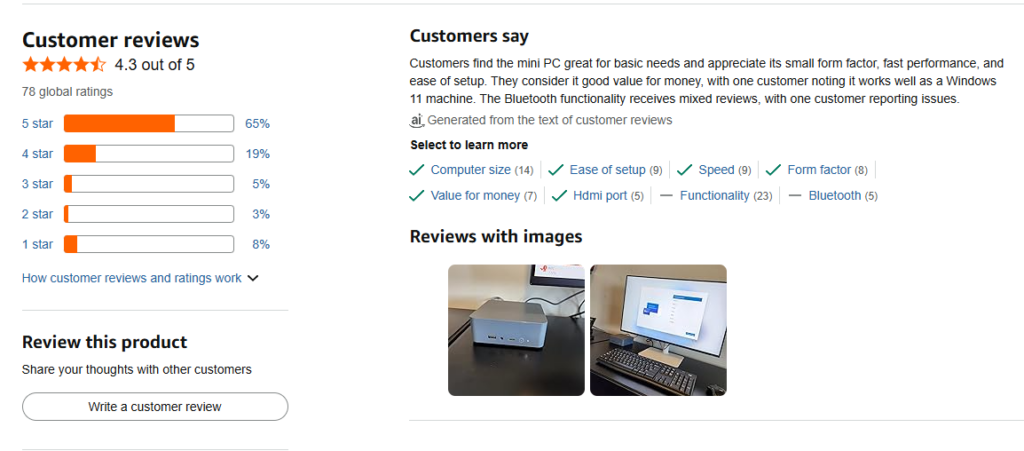

- Customer Reviews and Ratings (Sentiment Analysis): Review scraping enables sentiment mining for strengths and pain points, supporting product iteration, marketing optimization, and service improvements. In addition, cross-platform rating comparisons help benchmarking.

- Product Availability and Inventory Levels (Real-Time Tracking): Inventory tracking supports restock alerts, stockout detection, and competitor-stockout opportunity capture. Meanwhile, affiliates can avoid promoting out-of-stock products.

- Product Details and Variants (Structured Catalog Data): SKU, description, images, variants, and specs are essential for catalog quality, competitor feature comparison, and listing SEO consistency.

- Promotional Data (Competitor Analysis): Coupons, bundles, and seasonal promos reflect competitor strategy. Consequently, enterprises can react faster and identify opportunities.

Web Crawler API Solutions for E-Commerce Anti-Scraping

E-commerce sites protect prices, reviews, and inventory aggressively. However, a mature ecommerce crawler API typically mitigates these barriers through built-in capabilities.

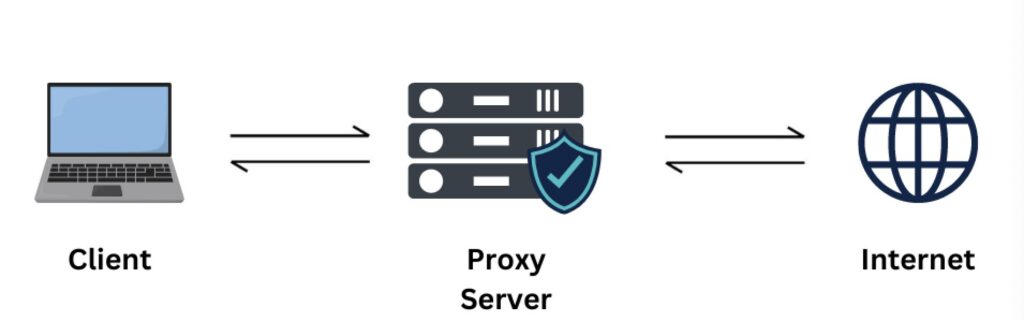

- IP Blocking and Rate Limiting: Platforms detect request bursts and block IPs. Therefore, leading crawler APIs use IP rotation plus configurable delays to reduce blocking risk.

- CAPTCHA: CAPTCHAs interrupt extraction. In practice, many professional crawler APIs provide built-in CAPTCHA handling for common challenges to reduce manual intervention.

- Dynamic Rendering (JavaScript): Modern e-commerce sites use React/Vue/Angular and load data via JS. Thus, HTML-only crawlers can miss AJAX-loaded prices/reviews, while crawler APIs may provide headless rendering to capture dynamic content.

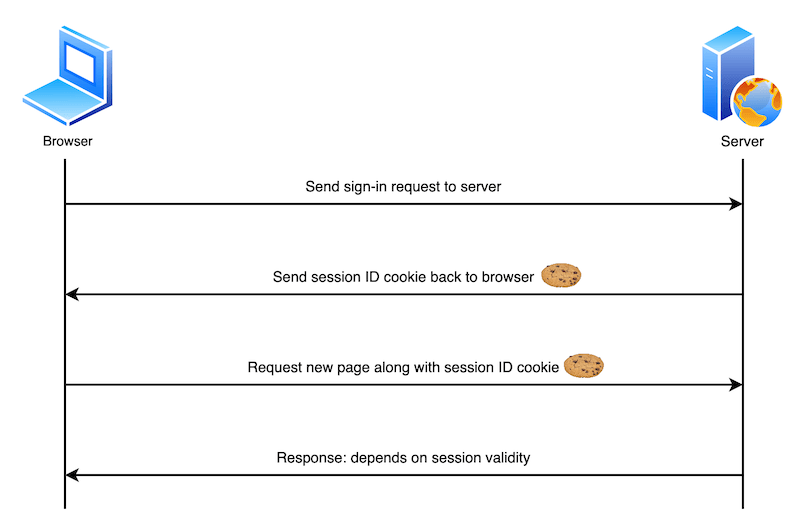

- Session Tracking and Cookies: Sites track sessions, cookies, and fingerprints. Accordingly, crawler APIs often support session persistence and user-behavior simulation to reduce suspicion.

- robots.txt Restrictions (Compliance): robots.txt defines crawling permissions. Therefore, compliance-aware teams configure robots checks and avoid restricted areas.
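The robots.txt check in the last bullet can be sketched with Python's standard `urllib.robotparser`. The rules, the `price-monitor-bot` agent name, and the `shop.example.com` URLs below are illustrative assumptions, not taken from any real site.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real crawler would download it from
# the target site (for example with RobotFileParser.set_url() and read()).
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Disallow: /account/
Crawl-delay: 5
"""

USER_AGENT = "price-monitor-bot"  # assumed crawler name


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text into a reusable rule checker."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def is_allowed(parser: RobotFileParser, url: str) -> bool:
    """Return True when the rules permit USER_AGENT to fetch the URL."""
    return parser.can_fetch(USER_AGENT, url)


robots = build_parser(SAMPLE_ROBOTS_TXT)
product_ok = is_allowed(robots, "https://shop.example.com/products/123")
checkout_ok = is_allowed(robots, "https://shop.example.com/checkout/cart")
delay = robots.crawl_delay(USER_AGENT)  # seconds requested by the site, if any
```

A crawler would skip URLs where the check fails and could use the reported crawl delay as a lower bound for its own request spacing.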


<p><strong>Reference:</strong> <a href="https://www.rfc-editor.org/rfc/rfc9309" target="_blank" rel="nofollow noopener">Robots Exclusion Protocol (RFC 9309)</a></p>

Ecommerce Crawler API Parameter Configuration for E-Commerce: Region, Language, Device

E-commerce data varies by region, language, and device. So, parameter consistency is critical to avoid distorted datasets.

- Region: Prices/availability differ by geolocation. For example, the same SKU can vary across US/EU/UK. Use region-matching IP and region parameters to keep pricing and inventory accurate.

- Language: Set language (e.g., via Accept-Language) to match target markets. Consequently, review analysis and copy insights remain market-relevant.

- Device: Mobile/desktop layouts and promotions differ. Therefore, set device/user-agent intentionally, especially for mobile-exclusive discounts.


<p><strong>Reference:</strong> <a href="https://developer.mozilla.org/en-US/docs/Web/HTTP" target="_blank" rel="nofollow noopener">HTTP request/response fundamentals (MDN)</a></p>
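One way to keep region, language, and device consistent is to bundle them into a single profile and derive every request option from it. The sketch below is only an illustration: `MarketProfile`, `REGION_PROXIES`, the proxy hostnames, and the user-agent strings are all assumptions, and a real crawler API may expose region as a query parameter or account option instead.

```python
from dataclasses import dataclass

# Assumed region-matched proxy endpoints (placeholders, not real hosts)
REGION_PROXIES = {
    "us": "http://us.proxy.example.com:8080",
    "uk": "http://uk.proxy.example.com:8080",
}

# Assumed user-agent strings per device class (illustrative only)
DEVICE_USER_AGENTS = {
    "desktop": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
               "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
    "mobile": "Mozilla/5.0 (iPhone; CPU iPhone OS 17_1 like Mac OS X) "
              "AppleWebKit/605.1.15 (KHTML, like Gecko) Version/17.1 "
              "Mobile/15E148 Safari/604.1",
}


@dataclass(frozen=True)
class MarketProfile:
    region: str    # key into REGION_PROXIES
    language: str  # value for the Accept-Language header
    device: str    # key into DEVICE_USER_AGENTS


def request_options(profile: MarketProfile) -> dict:
    """Derive proxy and headers from one profile so they cannot drift apart."""
    return {
        "proxy": REGION_PROXIES[profile.region],
        "headers": {
            "Accept-Language": profile.language,
            "User-Agent": DEVICE_USER_AGENTS[profile.device],
        },
    }


uk_mobile = MarketProfile(region="uk", language="en-GB", device="mobile")
options = request_options(uk_mobile)
```

Storing the profile alongside each crawl result also makes it possible to tell later which market a price or stock value came from.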

Web Crawler API Data Extraction: JSON-LD, HTML, and Platform APIs

The value of e-commerce data depends on structure. Accordingly, choose extraction methods based on availability and stability.

- JSON-LD (Preferred when available): Many product pages embed JSON-LD for search engines, including price, review, availability, and SKU. As a result, parsing JSON-LD can be faster and less error-prone than scraping raw HTML.

Reference: Schema.org Product vocabulary

Example structured output (illustrative format):

```json
{
  "total_items": 24,
  "page": 1,
  "per_page": 10,
  "products": [
    {
      "product_id": "B09P2Y797Q",
      "title": "Wireless Noise-Cancelling Bluetooth Headphones",
      "current_price": 99.99,
      "rating": 4.7,
      "review_count": 1256,
      "availability": "in_stock",
      "source_url": "https://www.amazon.com/dp/B09P2Y797Q"
    }
  ],
  "crawl_info": {
    "crawl_time": "2026-01-24T14:35:00",
    "category_url": "https://www.amazon.com/s?k=wireless+headphones",
    "proxy_used": "http://98.76.54.32:8888"
  }
}
```

- HTML Parsing (Universal, but fragile): If JSON-LD is missing, use CSS/XPath extraction from HTML. However, selectors may break when sites change classes or layout, so multi-selector fallback and monitoring are recommended.

- Third-Party E-Commerce APIs (Compliance-first supplement): When platforms provide official APIs, they can be structured and stable. Still, they may have strict rate limits, auth requirements, and partial coverage. Use them where available, and use crawler APIs for gaps.
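As a rough illustration of the JSON-LD-first approach, the sketch below pulls `application/ld+json` blocks out of a page with the standard library and reads a schema.org `Product` from them. The sample HTML and the output field names are assumptions; real pages may nest products inside `@graph` or use a list for `@type`, which this sketch does not handle. It returns `None` when no product is found, so a caller can fall back to HTML selectors.

```python
import json
from html.parser import HTMLParser


class JsonLdCollector(HTMLParser):
    """Collect the text of every <script type="application/ld+json"> tag."""

    def __init__(self):
        super().__init__()
        self._in_jsonld = False
        self._buffer = []
        self.blocks = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_jsonld = True
            self._buffer = []

    def handle_data(self, data):
        if self._in_jsonld:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_jsonld:
            self.blocks.append("".join(self._buffer))
            self._in_jsonld = False


def extract_product(html: str):
    """Return a dict of product fields from JSON-LD, or None if absent."""
    collector = JsonLdCollector()
    collector.feed(html)
    for block in collector.blocks:
        try:
            data = json.loads(block)
        except json.JSONDecodeError:
            continue  # skip malformed blocks instead of failing the page
        for item in (data if isinstance(data, list) else [data]):
            if isinstance(item, dict) and item.get("@type") == "Product":
                offers = item.get("offers") or {}
                if isinstance(offers, list):
                    offers = offers[0] if offers else {}
                rating = item.get("aggregateRating") or {}
                return {
                    "sku": item.get("sku"),
                    "title": item.get("name"),
                    "price": offers.get("price"),
                    "currency": offers.get("priceCurrency"),
                    "availability": offers.get("availability"),
                    "rating": rating.get("ratingValue"),
                }
    return None


# Illustrative page fragment (not from a real site)
SAMPLE_HTML = """
<html><head>
<script type="application/ld+json">
{"@context": "https://schema.org", "@type": "Product",
 "name": "Wireless Headphones", "sku": "WH-100",
 "offers": {"@type": "Offer", "price": "99.99", "priceCurrency": "USD",
            "availability": "https://schema.org/InStock"},
 "aggregateRating": {"@type": "AggregateRating", "ratingValue": "4.7"}}
</script>
</head><body></body></html>
"""

product = extract_product(SAMPLE_HTML)
```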

Pagination and Variant Handling in Ecommerce Crawler API Workflows

E-commerce listings often span many pages, and products may have variants. Therefore, handling both is essential for completeness.

Pagination Handling

Page-number pagination: Crawl ?page=2 style URLs until completion, while enforcing max-page guards to avoid loops.

Infinite scroll: Use headless rendering + scroll simulation, or call the underlying XHR endpoints when identifiable. Meanwhile, rate limits and delays should prevent blocking.
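The page-number case can be sketched as a generator with two guards: a hard page cap and a stop condition for pages that yield nothing new. `fetch_page` is a hypothetical callable standing in for whatever actually requests and parses `?page=N`; the `product_id` key and the cap of 50 are likewise assumptions.

```python
from typing import Callable, Iterator, List

MAX_PAGES = 50  # assumed safety cap against endless pagination


def crawl_pages(fetch_page: Callable[[int], List[dict]],
                max_pages: int = MAX_PAGES) -> Iterator[dict]:
    """Yield unique products page by page until nothing new appears."""
    seen_ids = set()
    for page in range(1, max_pages + 1):
        new_on_page = 0
        for item in fetch_page(page):
            if item["product_id"] in seen_ids:
                continue  # some sites repeat the last page instead of ending
            seen_ids.add(item["product_id"])
            new_on_page += 1
            yield item
        if new_on_page == 0:
            break  # empty page or only repeats: treat as the end


# Fake catalog: page 3 and beyond repeat page 2, as a looping site might
FAKE_CATALOG = {
    1: [{"product_id": "A"}, {"product_id": "B"}],
    2: [{"product_id": "C"}],
}


def fake_fetch(page: int) -> List[dict]:
    return FAKE_CATALOG.get(page, FAKE_CATALOG[2])


collected_ids = [item["product_id"] for item in crawl_pages(fake_fetch)]
```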

Product Variant Handling (SKU/Size/Color)

Variants can have different SKU, price, and availability. Thus, variant scraping should simulate selection (render mode) or call variant APIs if available. Store variants as a structured array under the parent product for analysis consistency.
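A possible shape for "variants as a structured array under the parent product" is shown below with dataclasses. The class and field names are assumptions chosen for illustration; the point is only that each variant carries its own SKU, price, and availability while staying attached to one parent record.

```python
from dataclasses import asdict, dataclass, field
from typing import List, Optional, Tuple


@dataclass
class Variant:
    sku: str
    attributes: dict          # e.g. {"color": "black", "size": "M"}
    price: Optional[float]    # None when the variant shows no price
    availability: str         # e.g. "in_stock" / "out_of_stock"


@dataclass
class Product:
    product_id: str
    title: str
    variants: List[Variant] = field(default_factory=list)

    def price_range(self) -> Optional[Tuple[float, float]]:
        """Lowest and highest known variant price, or None if unknown."""
        prices = [v.price for v in self.variants if v.price is not None]
        return (min(prices), max(prices)) if prices else None


headphones = Product(
    product_id="B09P2Y797Q",
    title="Wireless Headphones",
    variants=[
        Variant("WH-100-BLK", {"color": "black"}, 99.99, "in_stock"),
        Variant("WH-100-WHT", {"color": "white"}, 89.99, "out_of_stock"),
    ],
)

record = asdict(headphones)  # nested dict, ready to serialize as JSON
```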

E-Commerce Data Change Detection: Price and Inventory Alerts with an Ecommerce Crawler API

Change detection turns raw scraping into operational value. For example, teams can trigger alerts when prices change or inventory crosses thresholds.

- Price change alerts: Compare current price vs prior snapshot; alert if change exceeds thresholds (e.g., ≥5%).

- Inventory alerts: Alert on in-stock ↔ out-of-stock changes for restock and stockout reactions.

- Review/rating alerts: Alert on rating drops or spikes in negative reviews to support rapid response.

Importantly, use reasonable intervals to balance freshness and anti-bot risk; also set thresholds to reduce noisy alerts.
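The price and inventory rules above can be sketched as a comparison between two snapshots of the same product. The snapshot keys (`price`, `availability`) and the alert dictionary format are assumptions; the 5% default mirrors the example threshold mentioned earlier.

```python
def detect_alerts(previous: dict, current: dict,
                  price_threshold_pct: float = 5.0) -> list:
    """Compare two snapshots and return a list of alert dicts."""
    alerts = []

    old_price = previous.get("price")
    new_price = current.get("price")
    # Skip the price check when either value is missing or the old price is 0
    if old_price and new_price is not None:
        change_pct = (new_price - old_price) / old_price * 100
        if abs(change_pct) >= price_threshold_pct:
            alerts.append({"type": "price", "change_pct": round(change_pct, 2)})

    if previous.get("availability") != current.get("availability"):
        alerts.append({
            "type": "inventory",
            "from": previous.get("availability"),
            "to": current.get("availability"),
        })
    return alerts


yesterday = {"price": 100.0, "availability": "in_stock"}
today = {"price": 94.0, "availability": "out_of_stock"}
alerts = detect_alerts(yesterday, today)
```

With the default threshold, a 6% price drop together with a stock change produces two alerts, while a 3% drop with unchanged stock produces none.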

E-Commerce Crawler Data Storage: Raw Snapshots + Parsed Fields

A “raw snapshots + parsed fields” model balances compliance, analyzability, and recoverability.

- Raw snapshots (HTML / JSON-LD / screenshots): support audits and re-parsing without re-crawling. Store with metadata (time/region/device).

- Parsed fields (price/SKU/reviews/availability): store in queryable DBs for analysis and dashboards.

Best practices:

- Use retention policies to control storage cost.

- Compress snapshots (e.g., gzip).

- Encrypt or anonymize sensitive content where applicable.
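A minimal sketch of the "raw snapshots + parsed fields" model, assuming SQLite for storage and gzip for snapshot compression. The table and column names are hypothetical; a production setup would more likely keep snapshots in object storage and add retention and encryption on top.

```python
import gzip
import sqlite3
from datetime import datetime, timezone


def open_store(path: str = ":memory:") -> sqlite3.Connection:
    """Create the two tables: compressed raw snapshots and parsed fields."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS snapshots ("
        "id INTEGER PRIMARY KEY, url TEXT, crawled_at TEXT, "
        "region TEXT, device TEXT, raw_gzip BLOB)"
    )
    conn.execute(
        "CREATE TABLE IF NOT EXISTS product_fields ("
        "snapshot_id INTEGER REFERENCES snapshots(id), "
        "sku TEXT, price REAL, availability TEXT)"
    )
    return conn


def save_crawl(conn, url, raw_html, fields, region, device) -> int:
    """Store the gzip-compressed page plus its parsed fields; return the id."""
    crawled_at = datetime.now(timezone.utc).isoformat()
    cursor = conn.execute(
        "INSERT INTO snapshots (url, crawled_at, region, device, raw_gzip) "
        "VALUES (?, ?, ?, ?, ?)",
        (url, crawled_at, region, device, gzip.compress(raw_html.encode("utf-8"))),
    )
    snapshot_id = cursor.lastrowid
    conn.execute(
        "INSERT INTO product_fields (snapshot_id, sku, price, availability) "
        "VALUES (?, ?, ?, ?)",
        (snapshot_id, fields["sku"], fields["price"], fields["availability"]),
    )
    conn.commit()
    return snapshot_id


def load_raw(conn, snapshot_id: int) -> str:
    """Decompress a stored snapshot so it can be re-parsed without re-crawling."""
    row = conn.execute(
        "SELECT raw_gzip FROM snapshots WHERE id = ?", (snapshot_id,)
    ).fetchone()
    return gzip.decompress(row[0]).decode("utf-8")


store = open_store()
page = "<html><body><span id='price'>99.99</span></body></html>"
saved_id = save_crawl(
    store, "https://shop.example.com/p/1", page,
    {"sku": "WH-100", "price": 99.99, "availability": "in_stock"},
    region="us", device="desktop",
)
```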

Practical Implementation Process for E-Commerce Crawler APIs

Next, we use Scrapy to scrape a product detail page and show how teams typically configure delays, user agents, and proxies. (Note: large platforms may restrict automated access in their terms; configure and use responsibly.)

1. Environment Installation

```shell
pip install scrapy
pip install scrapy-splash
```

2. Create a Scrapy Project

```shell
scrapy startproject amazon_scraper
cd amazon_scraper
scrapy genspider amazon_spider amazon.com
```

3. Core configuration (settings.py)

```python
# -*- coding: utf-8 -*-
import random

BOT_NAME = 'amazon_scraper'

SPIDER_MODULES = ['amazon_scraper.spiders']
NEWSPIDER_MODULE = 'amazon_scraper.spiders'

# Respect robots.txt and pause between requests to the same site
ROBOTSTXT_OBEY = True
DOWNLOAD_DELAY = 3

USER_AGENT_LIST = [
    'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36',
    'Mozilla/5.0 (Macintosh; Intel Mac OS X 14_2) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/17.1 Safari/605.1.15',
    'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/119.0.0.0 Safari/537.36',
]

# Picked once at startup, so every request in a run shares one user agent.
# The built-in UserAgentMiddleware must stay enabled: it is what applies USER_AGENT.
USER_AGENT = random.choice(USER_AGENT_LIST)

DOWNLOADER_MIDDLEWARES = {
    # Runs before the built-in HttpProxyMiddleware (750), which reads request.meta['proxy']
    'amazon_scraper.middlewares.ProxyMiddleware': 543,
}

# Placeholder proxies; replace with endpoints from your own provider
PROXY_POOL = [
    'http://123.45.67.89:8080',
    'http://98.76.54.32:8888',
    'http://username:password@proxy.example.com:9090',
]

LOG_LEVEL = 'INFO'
FEED_EXPORT_ENCODING = 'utf-8'
```

4. Custom Proxy Middleware (middlewares.py)

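```python
# -*- coding: utf-8 -*-
import random


class ProxyMiddleware:
    """Attach a random proxy to each request and retry failed responses."""

    MAX_PROXY_RETRIES = 3  # stop retrying after this many non-200 responses

    def __init__(self, proxy_pool):
        self.proxy_pool = proxy_pool

    @classmethod
    def from_crawler(cls, crawler):
        # Read PROXY_POOL from the running crawler's settings
        return cls(crawler.settings.getlist('PROXY_POOL'))

    def process_request(self, request, spider):
        if not self.proxy_pool:
            return None
        proxy = random.choice(self.proxy_pool)
        request.meta['proxy'] = proxy
        spider.logger.info(f'Proxy in use: {proxy}')
        return None

    def process_response(self, request, response, spider):
        retries = request.meta.get('proxy_retries', 0)
        if response.status != 200 and self.proxy_pool and retries < self.MAX_PROXY_RETRIES:
            spider.logger.warning('Non-200 response; rotate proxy and retry')
            # dont_filter=True keeps the duplicate filter from dropping the retry;
            # process_request assigns a fresh proxy when it is rescheduled
            retry = request.replace(dont_filter=True)
            retry.meta['proxy_retries'] = retries + 1
            return retry
        return response
```

5. Spider (amazon_spider.py)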

```python
# -*- coding: utf-8 -*-
import scrapy


class AmazonSpider(scrapy.Spider):
    name = 'amazon_spider'
    allowed_domains = ['amazon.com']
    start_urls = ['https://www.amazon.com/dp/B09P2Y797Q']

    def parse(self, response):
        # Each field falls back to 'N/A' when its selector matches nothing
        product_title = (response.css('#productTitle::text').get() or '').strip() or 'N/A'
        # Try the full price first, then the whole-number part
        price = response.css('.a-offscreen::text').get() or response.css('.a-price-whole::text').get() or 'N/A'
        availability = (response.css('#availability span::text').get() or '').strip() or 'N/A'

        # Keep only the leading token of the rating text
        rating_text = response.css('.a-icon-alt::text').get() or ''
        rating = rating_text.split(' ')[0] if rating_text else 'N/A'

        # Keep only the leading token of the review-count text
        review_text = response.css('#acrCustomerReviewText::text').get() or ''
        review_count = review_text.split(' ')[0] if review_text else 'N/A'

        yield {
            'Product Title': product_title,
            'Price': price,
            'Inventory Status': availability,
            'Rating': rating,
            'Review Count': review_count,
            'Scraped URL': response.url,
        }
```

Run:

```shell
scrapy crawl amazon_spider -o products.json
```

FAQ: High-Frequency Issues in E-Commerce Crawling with an Ecommerce Crawler API

Q1: IP blocked—cannot continue crawling

Combine request delays with UA rotation, and route traffic through a high-quality rotating proxy pool. Reference:

Building a Proxy Pool: Crawler Proxy Pool
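For per-request UA rotation, one option is a small Scrapy downloader middleware like the sketch below. The class name `RotatingUserAgentMiddleware` is hypothetical, and it assumes a `USER_AGENT_LIST` setting such as the one defined in settings.py earlier. It sets the header directly on each request rather than relying on a single `USER_AGENT` value.

```python
import random


class RotatingUserAgentMiddleware:
    """Pick a random user agent for every outgoing request."""

    def __init__(self, user_agents):
        self.user_agents = list(user_agents)

    @classmethod
    def from_crawler(cls, crawler):
        # USER_AGENT_LIST is a custom setting, not a Scrapy built-in
        return cls(crawler.settings.getlist("USER_AGENT_LIST"))

    def process_request(self, request, spider):
        if self.user_agents:
            request.headers["User-Agent"] = random.choice(self.user_agents)
        return None  # let the request continue through the middleware chain
```

To try it, the class could be registered in `DOWNLOADER_MIDDLEWARES` with a priority below 500 (for example 400), so that it runs before Scrapy's built-in `UserAgentMiddleware`, which only fills in the header when it is not already set.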

Q2: CAPTCHA blocks the crawler

Use third-party solving for simple CAPTCHAs; for complex CAPTCHAs, use Playwright/Selenium or professional APIs with integrated handling. Reference:

MonkeyOCR Large Model OCR: Which Large Model OCR Is Better? (Part 1)

Q3: JS-rendered pages hide price/inventory

Use headless rendering (Scrapy-Playwright/Splash) or call underlying JSON endpoints directly when feasible.

Q4: Page structure changes break selectors

Prefer stable IDs/attributes, add fallback selectors, and add monitoring + alerts for empty fields.
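Fallback selectors and empty-field monitoring can be sketched with two small helpers. `first_match` and `missing_fields` are hypothetical names; `select` stands in for whatever runs a selector, for example `lambda s: response.css(s).get()` inside a Scrapy callback. The selector list reuses the two price selectors from the spider above.

```python
from typing import Callable, Iterable, List, Optional

# Ordered from most to least preferred
PRICE_SELECTORS = [".a-offscreen::text", ".a-price-whole::text"]


def first_match(select: Callable[[str], Optional[str]],
                selectors: Iterable[str]) -> Optional[str]:
    """Return the first non-empty, stripped value among the selectors."""
    for selector in selectors:
        value = select(selector)
        if value and value.strip():
            return value.strip()
    return None


def missing_fields(item: dict, required: Iterable[str]) -> List[str]:
    """List required fields that came back empty, for monitoring and alerts."""
    return [name for name in required if not item.get(name)]


# Fake page where the preferred selector no longer matches anything
fake_page = {".a-price-whole::text": " 99 "}
price = first_match(fake_page.get, PRICE_SELECTORS)

item = {"title": "Wireless Headphones", "price": price, "availability": None}
gaps = missing_fields(item, ["title", "price", "availability"])
```

Tracking how often `missing_fields` returns a non-empty list per site is a simple signal that a layout change has broken a selector.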

Q5: Missing pagination or variants

Handle both page traversal and variant selection. Additionally, validate variant availability combinations to avoid omissions.

Q6: Too slow for near-real-time monitoring

Use distributed crawling, reduce unnecessary middleware, and use tiered schedules (core SKUs more frequent, non-core less frequent).
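A tiered schedule can be as simple as one re-crawl interval per tier and a check for which SKUs are due. The tier names, the intervals, and the record layout below are assumptions for illustration only.

```python
from datetime import datetime, timedelta

# Assumed intervals: core SKUs are refreshed far more often than the rest
TIER_INTERVALS = {
    "core": timedelta(minutes=15),
    "standard": timedelta(hours=6),
}


def due_skus(records: list, now: datetime) -> list:
    """Return the SKUs whose tier interval has elapsed since the last crawl."""
    return [
        record["sku"]
        for record in records
        if now - record["last_crawled"] >= TIER_INTERVALS[record["tier"]]
    ]


now = datetime(2026, 1, 24, 12, 0)
tracked = [
    {"sku": "A", "tier": "core", "last_crawled": datetime(2026, 1, 24, 11, 40)},
    {"sku": "B", "tier": "core", "last_crawled": datetime(2026, 1, 24, 11, 50)},
    {"sku": "C", "tier": "standard", "last_crawled": datetime(2026, 1, 24, 7, 0)},
    {"sku": "D", "tier": "standard", "last_crawled": datetime(2026, 1, 24, 5, 0)},
]
to_crawl = due_skus(tracked, now)
```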

Q7: Compliance risks (robots.txt / terms / sensitive data)

Enable robots compliance (ROBOTSTXT_OBEY=True), avoid sensitive personal data, and apply retention/anonymization policies.

Related Guides

SerpAPI Amazon Competitor Analysis: Extract E-commerce Data and Build SEO Strategies

What is a Web Scraping API? A Complete Guide for Developers

Web Scraping API Cost Control: Caching, Deduplication, and Budget Governance

Generative Engine Optimization (GEO): The Transition from SEO to AI Search

Web Crawling & Data Collection Basics Guide

Web Scraping API Vendor Comparison: How to Choose a Highly Reliable and Scalable Solution