This article is part of our SERP API production best practices series and focuses on SERP API core technologies used in real-world systems.

In the previous article, SERP API Beginner’s Guide, we explained what a SERP API is, how it works, and where it applies. Another article, The Value and Misconceptions Behind SERP APIs, explored how SERP APIs relate to web scraping and the value they provide beyond data extraction.

Together, these articles show how SERP APIs make search data accessible, accurate, and real-time.

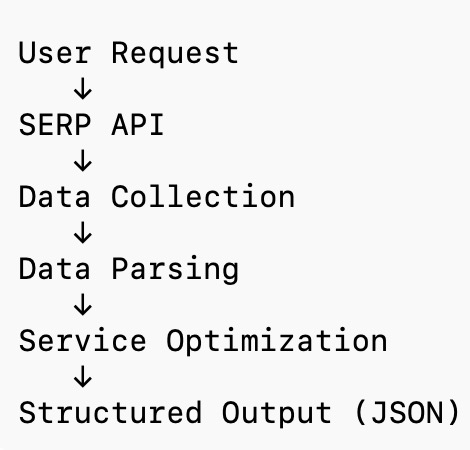

In this article, we dive deeper into SERP API core technologies and explain how a SERP API transforms search engine result pages into structured data through data collection, parsing, optimization, delivery, and compliance.

SERP API Core Technologies Overview

At a high level, a SERP API performs a simple task: convert search engine result pages into structured data.

However, the underlying process is full of engineering challenges, including:

- Constantly evolving anti-scraping defenses such as rate limits and CAPTCHA challenges

- Regional variations in result format and content (different languages, devices, and layouts)

- Frequent content updates (such as ads and recommendations) that require real-time data retrieval

- High stability expectations for a commercial-grade service

Because of these challenges, a SERP API needs a comprehensive engineering system to deliver data reliably. That system spans data collection, data parsing, service optimization, data delivery, and security and compliance.

SERP API Core Technologies: Data Collection

As the data source of the entire system, data collection is both foundational and one of the most difficult challenges.

This component relies heavily on web scraping technologies, including:

Distributed Proxy Network

To work around IP-based rate limits, SERP APIs use:

- Large-scale rotating IP pools

- Multiple regions and network exit nodes

- Randomized access patterns

This reduces the chance of being blocked due to high-frequency requests.
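To make the rotation idea concrete, here is a minimal Python sketch of a proxy pool that picks a random available exit node and benches any node that gets rate-limited or challenged. The class name `RotatingProxyPool`, the cooldown policy, and the proxy URLs are hypothetical; a production pool would also track regions, success rates, and overall pool health.

```python
import random
import time
from typing import Callable, Dict, List, Optional


class RotatingProxyPool:
    """Hypothetical rotating proxy pool: picks a random available proxy and
    benches any proxy that was blocked until its cooldown expires."""

    def __init__(self, proxies: List[str], cooldown_s: float = 60.0,
                 clock: Callable[[], float] = time.monotonic,
                 rng: Optional[random.Random] = None) -> None:
        if not proxies:
            raise ValueError("proxy list must not be empty")
        self.proxies = list(proxies)
        self.cooldown_s = cooldown_s
        self.clock = clock
        self.rng = rng or random.Random()
        # proxy URL -> time before which it must not be used
        self.benched_until: Dict[str, float] = {}

    def available(self) -> List[str]:
        now = self.clock()
        return [p for p in self.proxies if self.benched_until.get(p, 0.0) <= now]

    def pick(self) -> str:
        candidates = self.available()
        if not candidates:
            raise RuntimeError("all proxies are cooling down")
        # A random choice (rather than strict round-robin) makes the
        # sequence of exit IPs harder to predict.
        return self.rng.choice(candidates)

    def report_blocked(self, proxy: str) -> None:
        # Called after a rate-limit response or CAPTCHA page on this proxy.
        self.benched_until[proxy] = self.clock() + self.cooldown_s
```

In use, a crawler would call `pick()` before each request and `report_blocked(proxy)` whenever the response is a block page or an HTTP 429. Injecting `clock` and `rng` keeps the behavior deterministic in tests.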

Real User Behavior Simulation

Beyond IP checks, search engines enforce CAPTCHA and login verification.

Therefore, scrapers must simulate real user environments via:

- Browser user-agent (UA) strings, cookies, and locale/language settings

- Randomized request timing and interaction patterns
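A simplified sketch of the header and timing side of this, using Python's standard `random` module. The user-agent strings and the locale-to-`Accept-Language` table are illustrative placeholders, the function names are hypothetical, and cookie handling (typically a per-session cookie jar) is left out.

```python
import random
from typing import Dict

# Illustrative values only; a real system would maintain a larger, current list.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 "
    "(KHTML, like Gecko) Version/17.1 Safari/605.1.15",
    "Mozilla/5.0 (X11; Linux x86_64; rv:121.0) Gecko/20100101 Firefox/121.0",
]

# Hypothetical mapping from a target locale to an Accept-Language header.
ACCEPT_LANGUAGE = {
    "en-US": "en-US,en;q=0.9",
    "de-DE": "de-DE,de;q=0.9,en;q=0.6",
    "ja-JP": "ja-JP,ja;q=0.9,en;q=0.5",
}


def build_headers(locale: str, rng: random.Random) -> Dict[str, str]:
    """Return browser-like headers whose language matches the requested locale."""
    return {
        "User-Agent": rng.choice(USER_AGENTS),
        "Accept-Language": ACCEPT_LANGUAGE.get(locale, "en-US,en;q=0.9"),
        "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8",
    }


def jittered_delay(base_s: float, jitter_s: float, rng: random.Random) -> float:
    """Seconds to wait before the next request: a base delay plus uniform
    random jitter, so requests do not arrive at a fixed interval."""
    return base_s + rng.uniform(0.0, jitter_s)
```

A crawler might call `time.sleep(jittered_delay(2.0, 3.0, rng))` between requests so that its timing is not perfectly regular.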

Concurrency Control and Task Scheduling

To ensure timeliness and stability, SERP APIs use distributed job scheduling and retry mechanisms that keep data retrieval reliable at scale.

SERP API Core Technologies: Data Parsing

After overcoming anti-bot defenses and retrieving the raw page, the next challenge is parsing.

Since page structures vary significantly, SERP APIs must:

- Perform JavaScript rendering

- Locate and parse relevant DOM nodes

- Use regex or NLP extraction for special cases

- Identify different result categories, such as ads, maps, videos, and images
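As an illustration of DOM-based extraction, the following sketch uses Python's built-in `html.parser` to pull result titles and links out of markup and group them by category. The markup it expects (`<div class="result" data-type="...">`) is a hypothetical simplification; real result pages use different, frequently changing structures, and dynamically loaded content would first have to be rendered, for example by a headless browser.

```python
from html.parser import HTMLParser
from typing import Dict, List, Optional


class SerpParser(HTMLParser):
    """Groups results by category from a hypothetical, simplified markup:
    <div class="result" data-type="organic"><a href="...">Title</a>...</div>"""

    def __init__(self) -> None:
        super().__init__()
        self.results: Dict[str, List[Dict[str, str]]] = {}
        self._current: Optional[Dict[str, str]] = None
        self._category = ""
        self._in_link = False
        self._div_depth = 0  # <div> nesting depth inside the current result

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)
        if tag == "div":
            classes = (a.get("class") or "").split()
            if self._current is None and "result" in classes:
                # Start of a new result block; its category comes from data-type.
                self._current = {"title": "", "link": ""}
                self._category = a.get("data-type") or "unknown"
                self._div_depth = 1
            elif self._current is not None:
                self._div_depth += 1
        elif tag == "a" and self._current is not None and not self._current["link"]:
            # The first link in a result block is treated as the result's URL.
            self._current["link"] = a.get("href") or ""
            self._in_link = True

    def handle_endtag(self, tag):
        if tag == "a":
            self._in_link = False
        elif tag == "div" and self._current is not None:
            self._div_depth -= 1
            if self._div_depth == 0:  # closed the outer result <div>
                self.results.setdefault(self._category, []).append(self._current)
                self._current = None

    def handle_data(self, data):
        text = data.strip()
        if self._in_link and self._current is not None and text:
            self._current["title"] = (self._current["title"] + " " + text).strip()
```

Usage is `parser.feed(html_text)` followed by reading `parser.results`. Special cases that do not fit this DOM pattern are where the regex or NLP extraction mentioned above would come in.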

The final output is normalized into a structured format such as JSON, for example:

```json
{
  "organic_results": [...],
  "ads": [...],
  "images": [...],
  "videos": [...],
  "related_questions": [...],
  "local_results": [...],
  ...
}
```
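One way to guarantee a stable response shape is a normalization step that maps whatever categories the parser found onto a fixed set of keys. The sketch below assumes the parser produces a dict of category name to a list of result dicts; the category names, the `CATEGORY_TO_KEY` mapping, and the added `position` field are assumptions for illustration, while the top-level keys match the example response.

```python
import json
from typing import Dict, List

# Top-level keys from the example response; the category mapping is hypothetical.
RESPONSE_KEYS = ["organic_results", "ads", "images", "videos",
                 "related_questions", "local_results"]
CATEGORY_TO_KEY = {
    "organic": "organic_results",
    "ad": "ads",
    "image": "images",
    "video": "videos",
    "question": "related_questions",
    "local": "local_results",
}


def normalize(parsed: Dict[str, List[dict]]) -> Dict[str, List[dict]]:
    """Map parser categories onto a fixed response schema.

    Every key is always present (as an empty list when the page had no such
    results), and organic results get a 1-based `position` field."""
    out: Dict[str, List[dict]] = {key: [] for key in RESPONSE_KEYS}
    for category, items in parsed.items():
        key = CATEGORY_TO_KEY.get(category)
        if key is None:
            continue  # unknown block types are dropped in this sketch
        out[key].extend(dict(item) for item in items)  # copy; don't mutate input
    for i, item in enumerate(out["organic_results"], start=1):
        item["position"] = i
    return out
```

Serializing is then a single `json.dumps(normalize(parsed), indent=2)`. Always emitting every key lets clients read `response["videos"]` without first checking whether it exists.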

SERP API Core Technologies: Service Optimization

A commercial service handling large volumes of user requests cannot rely on a single backend process.

Multiple engineering techniques are applied to ensure high performance and stability:

- Load Balancing — distributes traffic across nodes to improve throughput

- Caching Mechanisms — reduce latency for frequent queries

- Pre-Fetching — proactively crawl trending keywords and update data periodically

- Concurrent Task Execution — leverage distributed and multi-threaded crawling to accelerate performance

- Health Monitoring — detect issues early and ensure long-term service reliability
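Of these techniques, caching is the easiest to show in a few lines. Below is a sketch of an in-process TTL cache with LRU eviction in Python. The names `TTLCache` and `cache_key` are hypothetical, and the key design (query plus location, device, and language, since results vary by each) is an assumption; a multi-node service would more likely use a shared cache.

```python
import time
from collections import OrderedDict
from typing import Any, Callable, Hashable, Optional


class TTLCache:
    """In-process cache with per-entry expiry and LRU eviction.

    Search results go stale, so entries expire after `ttl_s` seconds;
    `max_entries` bounds memory use."""

    def __init__(self, ttl_s: float, max_entries: int = 10_000,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl_s = ttl_s
        self.max_entries = max_entries
        self.clock = clock
        self._data: OrderedDict = OrderedDict()  # key -> (expires_at, value)

    def get(self, key: Hashable) -> Optional[Any]:
        entry = self._data.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self.clock() >= expires_at:
            del self._data[key]  # stale: caller should fetch fresh results
            return None
        self._data.move_to_end(key)  # mark as recently used
        return value

    def put(self, key: Hashable, value: Any) -> None:
        self._data[key] = (self.clock() + self.ttl_s, value)
        self._data.move_to_end(key)
        while len(self._data) > self.max_entries:
            self._data.popitem(last=False)  # evict the least recently used entry


def cache_key(query: str, location: str, device: str, language: str) -> tuple:
    # Assumption: queries differing only in case or surrounding spaces are
    # treated as the same; results differ by region, device and language,
    # so those are part of the key.
    return (query.strip().lower(), location, device, language)
```

A pre-fetching job could call `put()` for trending keywords on a schedule, so that user requests for those keywords are answered from the cache.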

SERP API Core Technologies: Data Delivery

As the final step in the pipeline, data delivery directly determines user experience.

To support diverse business needs, SERP APIs provide flexible delivery methods, including:

- Real-time querying via API

- SDK support for multiple programming languages

- Data returned in JSON or CSV formats

- Direct integration into dashboards for analytics and monitoring
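On the client side, real-time querying usually comes down to one HTTP request and a JSON response. The sketch below uses only the Python standard library; the endpoint `https://api.serp-provider.example/search` and the parameter names `q`, `location`, `engine`, and `api_key` are hypothetical, since each provider documents its own. It also shows flattening `organic_results` into CSV for a spreadsheet or dashboard.

```python
import csv
import io
import json
import urllib.parse
import urllib.request
from typing import Dict, Sequence

# Hypothetical endpoint; every provider defines its own URL and parameters.
API_BASE = "https://api.serp-provider.example/search"


def build_search_url(query: str, api_key: str, location: str = "United States",
                     engine: str = "google") -> str:
    params = {"q": query, "location": location, "engine": engine, "api_key": api_key}
    return API_BASE + "?" + urllib.parse.urlencode(params)


def search(query: str, api_key: str, timeout_s: float = 30.0) -> Dict:
    """Real-time query: one HTTP GET returning parsed JSON."""
    with urllib.request.urlopen(build_search_url(query, api_key), timeout=timeout_s) as resp:
        return json.load(resp)


def organic_to_csv(response: Dict,
                   fields: Sequence[str] = ("position", "title", "link")) -> str:
    """Flatten `organic_results` into CSV text; extra keys are ignored and
    missing ones are written as empty cells."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=list(fields), extrasaction="ignore")
    writer.writeheader()
    for row in response.get("organic_results", []):
        writer.writerow(row)
    return buf.getvalue()
```

A provider's official SDK would typically wrap the same request in a language-specific client; the CSV step is the kind of transformation that feeds a spreadsheet or BI dashboard.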

SERP API Core Technologies: Security & Compliance

This is where SERP APIs differ most from basic scrapers.

To ensure responsible data acquisition, providers must:

- Comply with regional data governance regulations

- Avoid accessing sensitive or protected information

- Respect website anti-robot policies and platform rules

- Maintain logs and risk-control strategies to manage user behavior
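The last item can be sketched as a per-user token-bucket rate limiter that writes an audit record for every decision. This is an illustrative Python sketch, not a compliance framework: `RiskControl`, the limits, and the log fields are hypothetical, and what to log and how long to retain it is itself a data governance decision.

```python
import json
import logging
import time
from typing import Callable, Dict

audit_log = logging.getLogger("serp.audit")


class TokenBucket:
    """Token-bucket limiter: refills `rate` tokens per second, bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate, self.capacity, self.clock = rate, capacity, clock
        self.tokens = capacity
        self.updated = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class RiskControl:
    """Hypothetical gatekeeper: per-user rate limits plus an audit trail."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate, self.capacity, self.clock = rate, capacity, clock
        self.buckets: Dict[str, TokenBucket] = {}

    def check(self, user_id: str, query: str) -> bool:
        bucket = self.buckets.get(user_id)
        if bucket is None:
            bucket = self.buckets[user_id] = TokenBucket(self.rate, self.capacity, self.clock)
        allowed = bucket.allow()
        # One structured record per decision, allowed or not.
        audit_log.info(json.dumps({"ts": time.time(), "user": user_id,
                                   "query": query, "allowed": allowed}))
        return allowed
```

Calling `logging.basicConfig(level=logging.INFO)` makes the audit records visible; without logging configuration, `info`-level records are not emitted.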

SERP API vendors are not only technology providers; they also bear the compliance risk of data collection.

Conclusion: Why SERP API Core Technologies Matter

SERP APIs make web data collection remarkably easy, often requiring just a single line of code.

But behind that simplicity lies a sophisticated engineering infrastructure including:

- Data collection

- Data parsing

- Service optimization

- Data delivery

Most importantly, SERP API vendors take on legal compliance and risk management on behalf of users.

As AI adoption accelerates, access to real-time internet data becomes essential:

- AI Agents require up-to-date information to execute tasks

- AI chatbots need fresh knowledge to generate accurate responses

SERP APIs will evolve from simple data extraction into an information pipeline: a real-time knowledge access layer for large language models.

The next article will further explore the role of SERP APIs in the AI ecosystem.